Some Things Sundry #6

Normally, the Some Things Sundry series on this newsletter starts off with an “An eclectic collection of thoughts and links that I found interesting this past week, which I have nowhere else to dump but here.” introduction and proceeds with 4-5 interesting links and perhaps a musing. This time, the proportions of links and musings are inverted! This is a good time for me to express just how much I appreciate all of you for tolerating and indulging me and my meandering brain.

Honestly, these should just have been tweets!

This too shall pass

I got into tech soon after the dotcom bust, and the AI cycle today seems more and more like the nineties dotcom boom. LLMs are like the early internet: the technology works and is magical, but how to deploy it usefully at scale is still an open question. In the late nineties, “dark fiber” was laid in anticipation of the internet continuing to grow exponentially; only after the bust did it prove useful, paving the way for the internet to grow again in the late 2000s (Web 2.0, the mainstreaming of e-commerce, etc.). Historically, the main bottlenecks in the advancement of AI or ML have been large amounts of labelled data and compute (and perhaps energy in the future). The dark fiber of AI being laid right now consists of more powerful chips, more powerful devices at our fingertips, and more labelled data available for training than ever before. A fundamental truth of cycles is that they end: this one will too. The next generation of AI is likely to be something beyond LLMs that needs even more data and compute, and that data and compute will be (cheaply) available for it.

What my mom thinks I do vs What I actually do

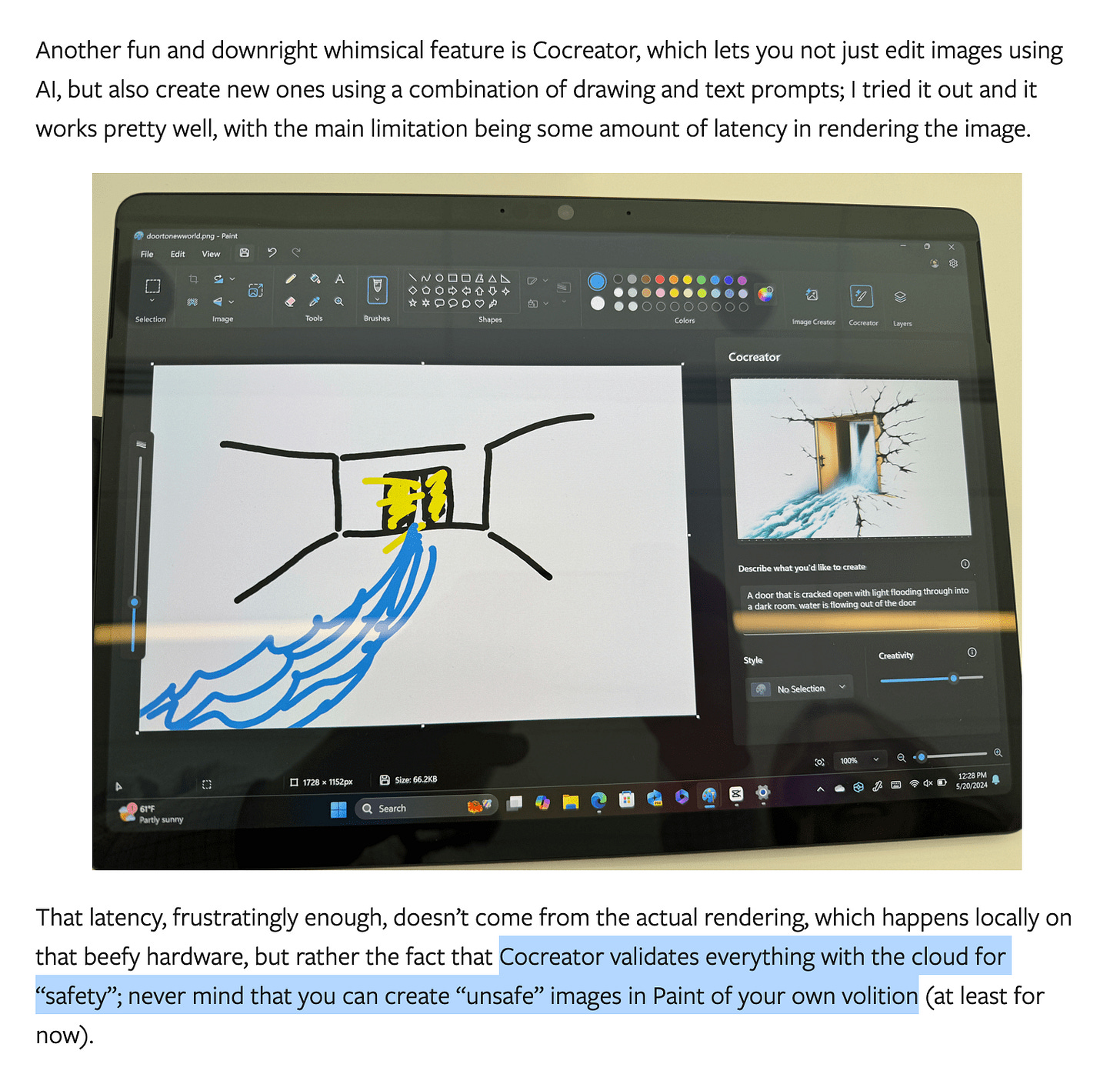

AI safety is such a loaded term that it can be made to mean whatever you want. On one hand, it feels like much of AI x-risk is hubris: to think we could, in the near future, build an evil god-like being that could destroy humanity?! On the other hand, if you don’t peer under the hood, all AI demos (like the one for GPT-4o) seem to be geared towards feeding the fear of that dystopia. In reality, though, most AI safety teams seem to spend their time monitoring thought-crimes, in a very Orwellian twist.

May we live in interesting times

We are underestimating the second-order effects of Ozempic. The company producing it, Novo Nordisk, is already Europe’s most valuable company, and its market value has surpassed Denmark’s GDP. We’ve all heard about fast food and grocery chains listing Ozempic / Wegovy as a business risk, but who could have predicted a marked rise in demand for ointments that reduce stretch marks? In hindsight, it’s obvious. How many other non-obvious shifts are hiding in plain sight, especially given the adjacent health benefits? Culturally, will beauty standards, body positivity, or food habits also change?

Side note: what does the success of Ozempic say about willpower?

This is not an ad, I swear

We’re also underestimating how big of a deal Tesla’s FSD is. Most people haven’t used it, obviously, and of those who have, many never get comfortable enough to let it take over. That is understandable: it has many edge cases, and it is jarring when the car gets stuck in the middle of traffic. But if you can get used to the discomfort of letting a computer drive, it is game-changing. If you drive regularly, you may not even realize how taxing it can be. Going from driving to watching the car drive frees up so much time and energy. I don’t use FSD all the time, but I turn it on for my regular commute or when battling slow traffic. I cannot think of a tech product that has made such a material difference to my life since I got my first mobile phone. The experience is also unlike what you usually get from tech products: a friend said it felt like a computer was acting as an equal partner, while my analogy is the letting go of control you go through when teaching someone how to drive.

That’s all for now. Next up: a brief essay about how a civilization can lose knowledge and undo progress.